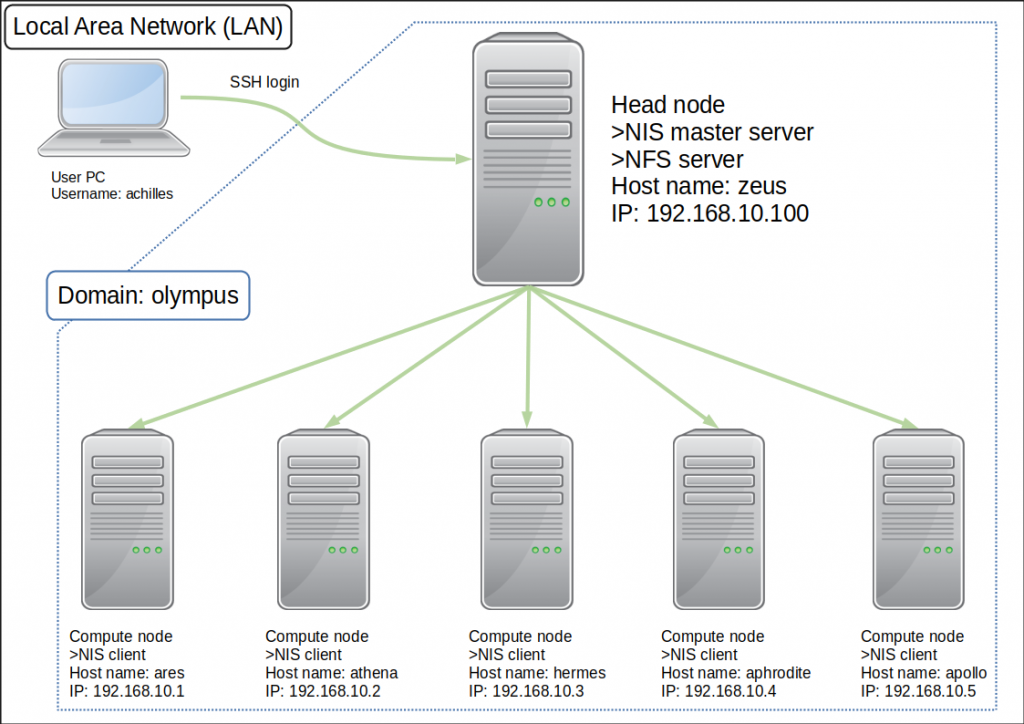

I am back with another blog post, this time about configuring SSH key-based login, Network Information Service (NIS), and Network File System (NFS), three of the most important parts of setting up a computer cluster, big or small. By a cluster, I mean a network of computers consisting of a head node and several compute nodes. A rough example is shown in Figure 1. I will use the same IP addresses, hostnames, etc. as examples in the explanations that follow.

Whenever we upgrade our OS, we have to set up everything from scratch: system configuration, network settings, and so on. These are things you don’t do frequently, so you almost forget how to do them, and new OS releases often specify different ways of configuring the system. So you have to search the web for documentation and tutorials. SSH key-based authentication, NIS, and NFS are almost always configured as a set, so I prefer a good tutorial that covers all three in one sweep, something I have not been able to find. I thought I was probably not the only one longing for this kind of one-stop tutorial and that writing one myself might help others. Hence, this blog was born.

The procedures discussed here are based on Ubuntu Server 20.04, which is assumed to be installed on both the head node and the compute nodes. It is also assumed that you have created an admin user and normal users on all nodes.

Please note that in all code boxes in this blog, usernames, domain names, hostnames, and IP addresses are just examples and should be replaced according to your own network configuration. Text following “//” is a comment I have added for clarity. Now, let’s get started.

Setting up SSH key-based authentication

To use SSH key-based login, each normal user first has to create an SSH key pair. When running the command below, I recommend not setting a passphrase, because a passphrase would defeat the purpose of logging in without being asked to type anything.

achilles@myubuntu:~$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/achilles/.ssh/id_rsa): // press Enter to accept the default, or type another path

Created directory '/home/achilles/.ssh'.

Enter passphrase (empty for no passphrase): // press Enter for no passphrase, or type one

Enter same passphrase again:

Your identification has been saved in /home/achilles/.ssh/id_rsa

Your public key has been saved in /home/achilles/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:7gvfoJosmvKWbihuOIap@povHeopnjbmXaUylmSuVgD achilles@myubuntu

The key's randomart image is:

// some ascii characters are usually displayed here

Once you have created your key pair, copy the public key to the server where you also have an account, using the command below.

achilles@myubuntu:~$ ssh-copy-id -i ~/.ssh/id_rsa.pub achilles@192.168.10.100

You will be asked for your login password, so type it and press Enter. Once the command has finished successfully, try logging in using your SSH key.

achilles@myubuntu:~$ ssh -i ~/.ssh/id_rsa achilles@192.168.10.100

You should be able to log in without being asked for a password. On the first connection, you will be asked whether you want to add the server to the list of known hosts. Answer yes, and you will not be asked again the next time you log in.
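If the login still asks for a password, one common cause is file permissions on the server: with the OpenSSH default of StrictModes enabled, sshd refuses to use a user's authorized_keys file when the home directory, ~/.ssh, or the file itself is writable by group or others. Below is a minimal sketch of a check for that. The function name is_private is made up for illustration, and the sketch assumes GNU stat as shipped with Ubuntu.

```shell
# is_private PATH
# Hypothetical helper (name made up for this post): fails when PATH is
# writable by group or others.
# Assumes GNU stat, where -c '%a' prints the octal mode, e.g. 700.
is_private() {
    path=$1
    mode=$(stat -c '%a' "$path") || return 2
    # 022 = group-write (020) plus other-write (002); the leading 0 makes
    # the shell read both numbers as octal.
    if [ $(( 0$mode & 022 )) -ne 0 ]; then
        echo "$path: mode $mode is writable by group or others"
        return 1
    fi
    echo "$path: mode $mode looks fine"
}
```

On the server you could call it for ~, ~/.ssh, and ~/.ssh/authorized_keys, and tighten anything it flags with chmod (for example chmod 700 ~/.ssh and chmod 600 ~/.ssh/authorized_keys).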

A convenient way of logging in to a remote server is by creating a config file that holds your ssh login information. You can create one by following the instructions below.

achilles@myubuntu:~$ cd .ssh/

achilles@myubuntu:.ssh$ vi config

// input the following information for every remote server you log in to

Host zeus

HostName 192.168.10.100

User achilles

IdentityFile ~/.ssh/id_rsa

// you can then login by simply issuing the following command

achilles@myubuntu:~$ ssh zeus

Once every normal user has finished setting up SSH key-based authentication, the admin user should disable password-based login for all of them by modifying the /etc/ssh/sshd_config file.

root@zeus:~# vi /etc/ssh/sshd_config

// write at end of file if you want to disable password login

// for achilles, prometheus and odysseus

Match User achilles,prometheus,odysseus

PasswordAuthentication no

// save the file then restart ssh service

root@zeus:~# systemctl restart ssh

If a job scheduling application such as Torque or OpenPBS is going to be installed in combination with the NIS and NFS services, normal users should also add the contents of their id_rsa.pub (public key) file to their own ~/.ssh/authorized_keys file. If there is no such file yet, create one. Once the NFS service is active and the server’s /home directory is mounted on the compute nodes, this prevents I/O errors in user home directories after jobs have finished. That is, it allows job results to be written to the users’ home directories.
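Adding the public key to authorized_keys can be done by hand in an editor, but here is a minimal sketch that does it in a repeatable way. The function name add_authorized_key is made up for illustration; the sketch assumes the public key file holds a single line, and it sets the 700/600 permissions that sshd expects.

```shell
# add_authorized_key PUBKEY_FILE AUTHORIZED_KEYS_FILE
# Hypothetical helper: appends the public key only if that exact line is
# not already present, so running it twice does no harm.
add_authorized_key() {
    pubkey_file=$1
    auth_file=$2
    auth_dir=$(dirname "$auth_file")

    # Create the directory and file with the permissions sshd expects.
    mkdir -p "$auth_dir"
    chmod 700 "$auth_dir"
    touch "$auth_file"
    chmod 600 "$auth_file"

    # -F fixed string, -x whole-line match, -f read the pattern from a file
    if ! grep -qxFf "$pubkey_file" "$auth_file"; then
        cat "$pubkey_file" >> "$auth_file"
    fi
}
```

A typical call would be add_authorized_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys, run by each normal user in their own account.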

Setting up Network Information Service (NIS)

NIS is a kind of directory service that lets hosts within a network share information such as hostnames, usernames, and passwords. This information is managed in one location (the master server) and is accessed by clients whenever needed. Depending on the number of clients, slave servers may also be employed. Slave servers maintain the same information as the master server but are accessed only when the master server is unreachable or when the network is bogged down by heavy traffic.

Switch to the admin user account you created previously, and from there to the root user. That step may seem a little cumbersome, but it adds a layer of security: you can restrict access to the root account by limiting access to the admin user to a select few.

Let us configure the NIS master server first. Before installing the necessary packages, update the package repositories.

admin@zeus:~$ sudo su -

[sudo] password for admin: // type password at the prompt

root@zeus:~# apt update // update repositories

The last command above refreshes the package lists from the registered repositories and then returns to the prompt.

Next, install the NIS package.

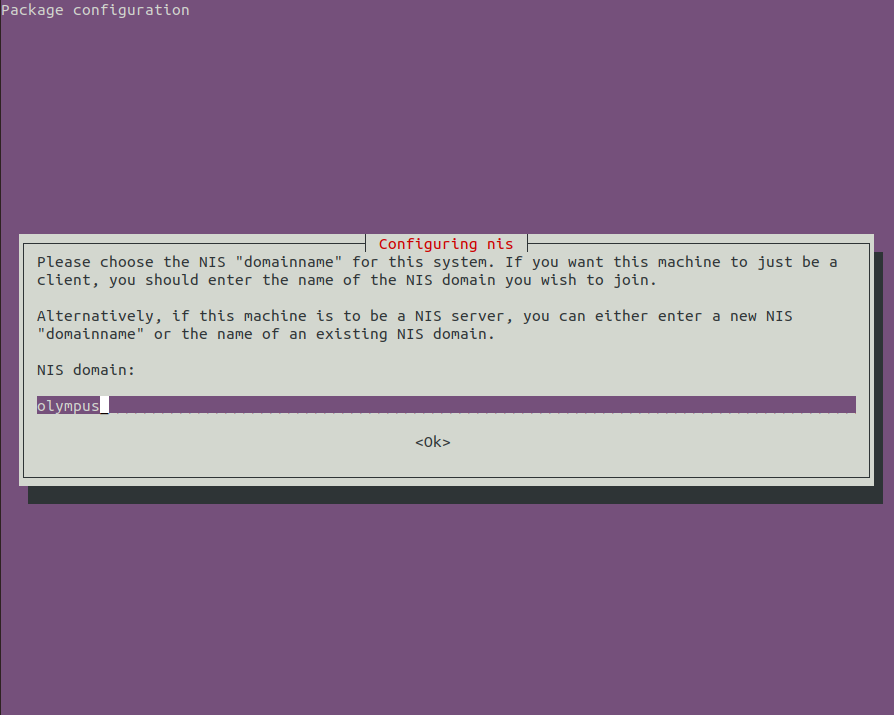

root@zeus:~# apt -y install nisThe command above will start the installation of the nis package and will prompt you for a domain name. The domain name is not the internet domain you are using, rather, it is some sort of a name you have decided to assign to the network you are in. An example screenshot is shown below.
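If you later want to confirm which domain name was entered, the nis package on Debian-based systems such as Ubuntu keeps it in /etc/defaultdomain. The sketch below simply reads that file back; read_nis_domain is a name made up for illustration, and the file path is passed as an argument so the function can also be tried on a copy.

```shell
# read_nis_domain [FILE]
# Hypothetical helper: prints the first non-empty line of a
# defaultdomain-style file, or fails if no domain is set.
read_nis_domain() {
    file=${1:-/etc/defaultdomain}
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
    # Print the first line that contains a non-blank character, then stop.
    domain=$(sed -n '/[^[:space:]]/{p;q;}' "$file")
    [ -n "$domain" ] || { echo "no domain set in $file" >&2; return 1; }
    printf '%s\n' "$domain"
}
```

With the example values used in this blog, calling read_nis_domain on the master should print olympus.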

Continue with the following instructions.

// edit the /etc/default/nis file.

root@zeus:~# vi /etc/default/nis

// make the change indicated below

NISSERVER=master

// edit the /etc/ypserv.securenets file

root@zeus:~# vi /etc/ypserv.securenets

// comment out the line containing '0.0.0.0' as indicated below

# This line gives access to everybody. PLEASE ADJUST!

# 0.0.0.0 0.0.0.0

// at the end, add the range of IP addresses that are allowed access (netmask first, then network address)

// in the example below all hosts that belong to the 192.168.10.0 network

// will be allowed access

255.255.255.0 192.168.10.0

// edit the /etc/hosts file

root@zeus:~# vi /etc/hosts

// keep the localhost uncommented

127.0.0.1 localhost

// add IP addresses of hosts that are allowed to use NIS

// below are sample entries for a master server and 5 clients

192.168.10.100 zeus

192.168.10.1 ares

192.168.10.2 athena

192.168.10.3 hermes

192.168.10.4 aphrodite

192.168.10.5 apollo

// restart rpcbind and nis services

root@zeus:~# systemctl restart rpcbind nis

// update the NIS database

root@zeus:~# /usr/lib/yp/ypinit -m

At this point, we have to construct a list of the hosts which will run NIS servers. server is in the list of NIS server hosts. Please continue to add the names for the other hosts, one per line. When you are done with the list, type a <control D>.

next host to add: zeus

next host to add: // press Ctrl + D

The current list of NIS servers looks like this:

zeus

Is this correct? [y/n: y] y

We need a few minutes to build the databases...

Building /var/yp/olympus/ypservers...

Running /var/yp/Makefile...

make[1]: Entering directory '/var/yp/olympus'

Updating passwd.byname...

Updating passwd.byuid...

Updating group.byname...

Updating group.bygid...

Updating hosts.byname...

Updating hosts.byaddr...

Updating rpc.byname...

Updating rpc.bynumber...

Updating services.byname...

Updating services.byservicename...

Updating netid.byname...

Updating protocols.bynumber...

Updating protocols.byname...

Updating netgroup...

Updating netgroup.byhost...

Updating netgroup.byuser...

Updating shadow.byname... Ignored -> merged with passwd

make[1]: Leaving directory '/var/yp/olympus'

zeus has been set up as a NIS master server.

Now you can run ypinit -s zeus on all slave server.

The NIS server configuration is now complete. However, you should run the following commands whenever you add new users to your network, so that the NIS maps are rebuilt.

root@zeus:~# cd /var/yp

root@zeus:/var/yp# make

Now, let’s move on to setting up the NIS clients.
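Before configuring a client, it can be worth double-checking that its IP address falls inside the range allowed in /etc/ypserv.securenets on the master. Each entry there is a netmask followed by a network address, and a client is accepted when its address ANDed with the netmask equals the network. The sketch below just redoes that arithmetic in the shell. The names ip_to_int and securenets_allows are made up for illustration, and the sketch only handles plain dotted-quad IPv4 addresses without leading zeros.

```shell
# ip_to_int A.B.C.D
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# securenets_allows NETMASK NETWORK IP
# Hypothetical helper: succeeds when IP & NETMASK equals NETWORK & NETMASK,
# which is how a "netmask network" line in ypserv.securenets is read.
securenets_allows() {
    mask=$(ip_to_int "$1")
    net=$(ip_to_int "$2")
    ip=$(ip_to_int "$3")
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}
```

For the sample entry in this blog, securenets_allows 255.255.255.0 192.168.10.0 192.168.10.1 succeeds for the client ares, while an address such as 192.168.11.1 would be rejected.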

As with the NIS server, configuring an NIS client starts with switching to the root user and updating the repositories. That is the basic routine whenever you install new packages or applications on a Linux system. Then install the NIS package using the same command: apt -y install nis. You will again be prompted for the NIS domain name, as in the screenshot shown earlier. Enter the same domain name as before, then press Enter. Next, perform the following steps.

// edit the file /etc/yp.conf

root@ares:~# vi /etc/yp.conf

#

# yp.conf Configuration file for the ypbind process. You can define

# NIS servers manually here if they can't be found by

# broadcasting on the local net (which is the default).

#

# See the manual page of ypbind for the syntax of this file.

#

# IMPORTANT: For the "ypserver", use IP addresses, or make sure that

# the host is in /etc/hosts. This file is only interpreted

# once, and if DNS isn't reachable yet the ypserver cannot

# be resolved and ypbind won't ever bind to the server.

# ypserver ypserver.network.com

#

// add the following information to the file

domain olympus server zeus

// make modifications to the /etc/nsswitch.conf file.

root@ares:~# vi /etc/nsswitch.conf

// add 'nis' as indicated below

passwd: files systemd nis

group: files systemd nis

shadow: files nis

gshadow: files

hosts: files dns nis

// restart the rpcbind and nis services.

root@ares:~# systemctl restart rpcbind nis

Now that you have completed the setup, check that it is working by executing the command ypwhich. That should tell you the name of your NIS server.

root@ares:~# ypwhich

zeus
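If ypwhich answers but NIS users still cannot be looked up on the client, the first thing worth checking is whether nis really made it into /etc/nsswitch.conf. Here is a minimal sketch of such a check; nsswitch_has_nis is a name made up for illustration, and the file path can be overridden so the function can be tried on a copy.

```shell
# nsswitch_has_nis DATABASE [FILE]
# Hypothetical helper: succeeds if the line for DATABASE (e.g. passwd)
# in an nsswitch.conf-style file lists "nis" among its sources.
nsswitch_has_nis() {
    database=$1
    file=${2:-/etc/nsswitch.conf}
    awk -v db="$database:" '
        # The first field is the database name followed by a colon.
        $1 == db { for (i = 2; i <= NF; i++) if ($i == "nis") found = 1 }
        END { exit found ? 0 : 1 }
    ' "$file"
}
```

With the settings from this section, the check should pass for passwd, group, shadow, and hosts, and fail for gshadow. After that, running getent passwd followed by a username that exists only on the master is a quick end-to-end test.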

Setting up Network File System (NFS)

Network File System (NFS) is a protocol that allows a file system on one server to be mounted over the network by several clients at the same time. This lets users logged in on a client access their home directory on the master server much like a local file system. Assuming that the master server’s /home folder is mounted on each client’s /home folder and each user’s public key has been added to the authorized_keys file, there is no need to install the users’ SSH public keys on the clients themselves. When a job scheduling system is used in the cluster, this also ensures that there are no write permission errors after jobs have run on the compute nodes (the clients).

Now, let’s configure the NFS server first.

// install the NFS server package

root@zeus:~# apt -y install nfs-kernel-server

// modify the /etc/idmapd.conf file:

root@zeus:~# vi /etc/idmapd.conf

// uncomment then change to the correct domain name

Domain = olympus

// save and close file

// make changes to the /etc/exports file.

root@zeus:~# vi /etc/exports

// allow the /home folder to be exported to your network

// where your computer cluster is located by adding the line below

/home 192.168.10.0/24(rw,no_root_squash)

// After editing and saving the file, restart the nfs-server.

root@zeus:~# systemctl restart nfs-server

// find the UUID of the /home folder in your file system

root@zeus:~# blkid

// executing the command above will show the UUID of

// every partition in the file system

// take note of the UUID of the partition where the /home folder is mounted

// you will need it in the next section

Now, let’s take care of the NFS clients. Log in to one of the clients and switch to the root user. Then perform the following procedure.

// install the NFS package

root@ares:~# apt -y install nfs-common

// edit the /etc/idmapd.conf file

root@ares:~# vi /etc/idmapd.conf

// uncomment then set to proper domain name

Domain = olympus

// save and close the file

// edit the /etc/fstab file

root@ares:~# vi /etc/fstab

// add the following at the end of the file if you are mounting

// at the /home folder, otherwise, change to preferred mount point

// replace <uuid> with the UUID you found above after issuing the

// blkid command

UUID=<uuid> /home xfs defaults 0 0

// save and close file


To enable dynamic mounting let us configure autofs.

// install the autofs package

root@ares:~# apt -y install autofs

// edit the /etc/auto.master file

root@ares:~# vi /etc/auto.master

// add to end of file

/- /etc/auto.mount

// save and close the file

// create the /etc/auto.mount file

root@ares:~# vi /etc/auto.mount

// add the following at the end of the file

/home -fstype=nfs,rw zeus:/home

// save and close file

// restart autofs

root@ares:~# systemctl restart autofs

Now that you have finished configuring NFS on one of the clients, repeat the same procedure on all the remaining clients.
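Once all clients are done, a small loop like the one below can confirm that autofs is running on each of them. This is only a sketch: on_each_client and the RUNNER variable are made up for illustration, the host list is the sample one from the /etc/hosts example, and it assumes you can already ssh to every client by hostname.

```shell
# Sample client hostnames from the /etc/hosts example in this blog.
CLIENTS="ares athena hermes aphrodite apollo"

# on_each_client COMMAND [ARGS...]
# Hypothetical helper: runs the same command on every client.
# RUNNER defaults to ssh; set RUNNER=echo for a dry run that only
# prints what would be executed.
on_each_client() {
    for host in $CLIENTS; do
        ${RUNNER:-ssh} "$host" "$@"
    done
}
```

A dry run would be RUNNER=echo on_each_client systemctl is-active autofs, which prints one line per host. Without RUNNER set, the same call asks each client over ssh whether the autofs service is active.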

To check that the /home folder is properly mounted, log in to the NFS server (head node) with a normal user account and execute ‘ls -ltra’. Then log in to any client using the same account. You should be able to log in via SSH key authentication without any problem. Once you are logged in, issue the same ‘ls -ltra’ command. You should see the same file list as on the NFS server.
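Comparing file lists works, but you can also ask the client directly whether /home is served over NFS. The sketch below looks for the mount point in a /proc/mounts-style file; is_nfs_mounted is a name made up for illustration, and the file path can be overridden for testing. Keep in mind that with autofs the NFS entry typically appears only after /home has been accessed at least once.

```shell
# is_nfs_mounted MOUNTPOINT [MOUNTS_FILE]
# Hypothetical helper: succeeds if MOUNTPOINT is listed with an NFS file
# system type. Each line of /proc/mounts has the fields:
#   device  mountpoint  fstype  options  dump  pass
is_nfs_mounted() {
    mountpoint=$1
    mounts_file=${2:-/proc/mounts}
    awk -v mp="$mountpoint" '
        $2 == mp && ($3 == "nfs" || $3 == "nfs4") { found = 1 }
        END { exit found ? 0 : 1 }
    ' "$mounts_file"
}
```

On a client you could run ls /home first to trigger the automount, then is_nfs_mounted /home && echo "/home is on NFS".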

And that’s it: you now have a computer cluster with working NIS, NFS, and SSH key-based authentication.

See you again soon!

Category: Linux-related